PostgreSQL 7.3.2 Reference Manual

The PostgreSQL Global Development Group

PostgreSQL 7.3.2 Reference Manual

by The PostgreSQL Global Development Group

Copyright © 1996-2002 by The PostgreSQL Global Development Group

Legal Notice

PostgreSQL is Copyright © 1996-2002 by the PostgreSQL Global Development Group and is distributed under the terms of the license of the

University of California below.

Postgres95 is Copyright © 1994-5 by the Regents of the University of California.

Permission to use, copy, modify, and distribute this software and its documentation for any purpose, without fee, and without a written

agreement is hereby granted, provided that the above copyright notice and this paragraph and the following two paragraphs appear in all

copies.

IN NO EVENT SHALL THE UNIVERSITY OF CALIFORNIA BE LIABLE TO ANY PARTY FOR DIRECT, INDIRECT, SPECIAL,

INCIDENTAL, OR CONSEQUENTIAL DAMAGES, INCLUDING LOST PROFITS, ARISING OUT OF THE USE OF THIS SOFTWARE

AND ITS DOCUMENTATION, EVEN IF THE UNIVERSITY OF CALIFORNIA HAS BEEN ADVISED OF THE POSSIBILITY OF

SUCH DAMAGE.

THE UNIVERSITY OF CALIFORNIA SPECIFICALLY DISCLAIMS ANY WARRANTIES, INCLUDING, BUT NOT LIMITED TO,

THE IMPLIED WARRANTIES OF MERCHANTABILITY AND FITNESS FOR A PARTICULAR PURPOSE. THE SOFTWARE PRO-

VIDED HEREUNDER IS ON AN “AS-IS” BASIS, AND THE UNIVERSITY OF CALIFORNIA HAS NO OBLIGATIONS TO PROVIDE

MAINTENANCE, SUPPORT, UPDATES, ENHANCEMENTS, OR MODIFICATIONS.

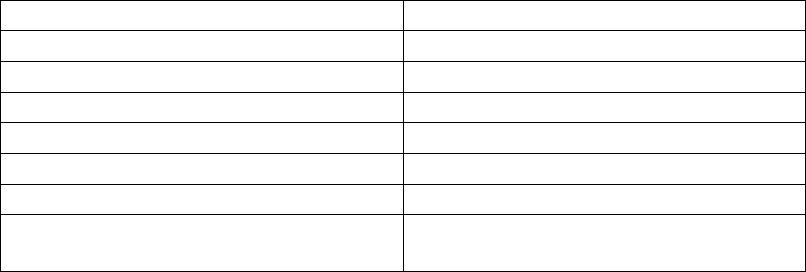

Table of Contents

Preface ..................................................................................................................................................i

I. SQL Commands..............................................................................................................................1

ABORT .......................................................................................................................................1

ALTER DATABASE...................................................................................................................1

ALTER GROUP..........................................................................................................................1

ALTER TABLE...........................................................................................................................1

ALTER TRIGGER......................................................................................................................1

ALTER USER.............................................................................................................................1

ANALYZE ..................................................................................................................................1

BEGIN ........................................................................................................................................1

CHECKPOINT ...........................................................................................................................1

CLOSE........................................................................................................................................1

CLUSTER...................................................................................................................................1

COMMENT ................................................................................................................................1

COMMIT ....................................................................................................................................1

COPY..........................................................................................................................................1

CREATE AGGREGATE.............................................................................................................1

CREATE CAST ..........................................................................................................................1

CREATE CONSTRAINT TRIGGER.........................................................................................1

CREATE CONVERSION...........................................................................................................1

CREATE DATABASE ................................................................................................................1

CREATE DOMAIN....................................................................................................................1

CREATE FUNCTION ................................................................................................................1

CREATE GROUP .......................................................................................................................1

CREATE INDEX........................................................................................................................1

CREATE LANGUAGE...............................................................................................................1

CREATE OPERATOR................................................................................................................1

CREATE OPERATOR CLASS...................................................................................................1

CREATE RULE ..........................................................................................................................1

CREATE SCHEMA....................................................................................................................1

CREATE SEQUENCE................................................................................................................1

CREATE TABLE........................................................................................................................1

CREATE TABLE AS..................................................................................................................1

CREATE TRIGGER ...................................................................................................................1

CREATE TYPE...........................................................................................................................1

CREATE USER ..........................................................................................................................1

CREATE VIEW..........................................................................................................................1

DEALLOCATE...........................................................................................................................1

DECLARE ..................................................................................................................................1

DELETE......................................................................................................................................1

DROP AGGREGATE .................................................................................................................1

DROP CAST...............................................................................................................................1

DROP CONVERSION ...............................................................................................................1

DROP DATABASE.....................................................................................................................1

DROP DOMAIN.........................................................................................................................1

DROP FUNCTION.....................................................................................................................1

DROP GROUP............................................................................................................................1

DROP INDEX.............................................................................................................................1

DROP LANGUAGE ...................................................................................................................1

iii

DROP OPERATOR.....................................................................................................................1

DROP OPERATOR CLASS.......................................................................................................1

DROP RULE...............................................................................................................................1

DROP SCHEMA.........................................................................................................................1

DROP SEQUENCE ....................................................................................................................1

DROP TABLE.............................................................................................................................1

DROP TRIGGER........................................................................................................................1

DROP TYPE ...............................................................................................................................1

DROP USER...............................................................................................................................1

DROP VIEW...............................................................................................................................1

END ............................................................................................................................................1

EXECUTE ..................................................................................................................................1

EXPLAIN....................................................................................................................................1

FETCH........................................................................................................................................1

GRANT.......................................................................................................................................1

INSERT.......................................................................................................................................1

LISTEN.......................................................................................................................................1

LOAD..........................................................................................................................................1

LOCK..........................................................................................................................................1

MOVE.........................................................................................................................................1

NOTIFY ......................................................................................................................................1

PREPARE....................................................................................................................................1

REINDEX...................................................................................................................................1

RESET ........................................................................................................................................1

REVOKE.....................................................................................................................................1

ROLLBACK................................................................................................................................1

SELECT......................................................................................................................................1

SELECT INTO............................................................................................................................1

SET..............................................................................................................................................1

SET CONSTRAINTS.................................................................................................................1

SET SESSION AUTHORIZATION...........................................................................................1

SET TRANSACTION.................................................................................................................1

SHOW.........................................................................................................................................1

START TRANSACTION............................................................................................................1

TRUNCATE................................................................................................................................1

UNLISTEN.................................................................................................................................1

UPDATE......................................................................................................................................1

VACUUM....................................................................................................................................1

II. PostgreSQL Client Applications...................................................................................................4

clusterdb......................................................................................................................................5

createdb.......................................................................................................................................8

createlang ..................................................................................................................................11

createuser ..................................................................................................................................14

dropdb .......................................................................................................................................17

droplang ....................................................................................................................................20

dropuser.....................................................................................................................................22

ecpg...........................................................................................................................................25

pg_config...................................................................................................................................27

pg_dump....................................................................................................................................29

pg_dumpall................................................................................................................................36

pg_restore..................................................................................................................................39

iv

psql............................................................................................................................................46

pgtclsh.......................................................................................................................................68

pgtksh........................................................................................................................................69

vacuumdb..................................................................................................................................70

III. PostgreSQL Server Applications ..............................................................................................73

initdb .........................................................................................................................................74

initlocation ................................................................................................................................77

ipcclean .....................................................................................................................................78

pg_ctl.........................................................................................................................................79

pg_controldata...........................................................................................................................83

pg_resetxlog..............................................................................................................................84

postgres .....................................................................................................................................86

postmaster .................................................................................................................................90

v

Preface

The entries in this Reference Manual are meant to provide in reasonable length an authoritative,

complete, and formal summary about their respective subjects. More information about the use of

PostgreSQL, in narrative, tutorial, or example form, may be found in other parts of the PostgreSQL

documentation set. See the cross-references listed on each reference page.

The Reference Manual entries are also available as traditional “man” pages.

i

I. SQL Commands

This part contains reference information for the SQL commands supported by PostgreSQL. By “SQL”

the language in general is meant; information about the standards conformance and compatibility of

each command can be found on the respective reference page.

ABORT

Name

ABORT — abort the current transaction

Synopsis

ABORT [ WORK | TRANSACTION ]

Inputs

None.

Outputs

ROLLBACK

Message returned if successful.

WARNING: ROLLBACK: no transaction in progress

If there is not any transaction currently in progress.

Description

ABORT rolls back the current transaction and causes all the updates made by the transaction to be

discarded. This command is identical in behavior to the SQL92 command ROLLBACK, and is present

only for historical reasons.

Notes

Use COMMIT to successfully terminate a transaction.

Usage

To abort all changes:

ABORT WORK;

1

ABORT

Compatibility

SQL92

This command is a PostgreSQL extension present for historical reasons. ROLLBACK is the SQL92

equivalent command.

2

ALTER DATABASE

Name

ALTER DATABASE — change a database

Synopsis

ALTER DATABASE name SET variable { TO | = } { value | DEFAULT }

ALTER DATABASE name RESET variable

Description

ALTER DATABASE is used to change the session default of a run-time configuration variable for a

PostgreSQL database. Whenever a new session is subsequently started in that database, the specified

value becomes the session default value. The database-specific default overrides whatever setting is

present in postgresql.conf or has been received from the postmaster.

Only a superuser or the database owner can change the session defaults for a database.

Parameters

name

The name of the database whose session defaults are to be altered.

variable

value

Set the session default for this database of the specified configuration variable to the given value.

If value is DEFAULT or, equivalently, RESET is used, the database-specific variable setting is

removed and the system-wide default setting will be inherited in new sessions. Use RESET ALL

to clear all settings.

See SET and the Administrator’s Guide for more information about allowed variable names and

values.

Diagnostics

ALTER DATABASE

Message returned if the alteration was successful.

ERROR: database "dbname" does not exist

Error message returned if the specified database is not known to the system.

1

ALTER DATABASE

Notes

Using ALTER USER, it is also possible to tie a session default to a specific user rather than a database.

User-specific settings override database-specific ones if there is a conflict.

Examples

To disable index scans by default in the database test:

ALTER DATABASE test SET enable_indexscan TO off;

Compatibility

The ALTER DATABASE statement is a PostgreSQL extension.

See Also

ALTER USER, CREATE DATABASE, DROP DATABASE, SET

2

ALTER GROUP

Name

ALTER GROUP — add users to a group or remove users from a group

Synopsis

ALTER GROUP name ADD USER username [, ... ]

ALTER GROUP name DROP USER username [, ... ]

Inputs

name

The name of the group to modify.

username

Users which are to be added or removed from the group. The user names must exist.

Outputs

ALTER GROUP

Message returned if the alteration was successful.

Description

ALTER GROUP is used to add or remove users from a group. Only database superusers can use this

command. Adding a user to a group does not create the user. Similarly, removing a user from a group

does not drop the user itself.

Use CREATE GROUP to create a new group and DROP GROUP to remove a group.

Usage

Add users to a group:

ALTER GROUP staff ADD USER karl, john;

Remove a user from a group:

ALTER GROUP workers DROP USER beth;

1

ALTER GROUP

Compatibility

SQL92

There is no ALTER GROUP statement in SQL92. The concept of roles is similar.

2

ALTER TABLE

Name

ALTER TABLE — change the definition of a table

Synopsis

ALTER TABLE [ ONLY ] table [ * ]

ADD [ COLUMN ] column type [ column_constraint [ ... ] ]

ALTER TABLE [ ONLY ] table [ * ]

DROP [ COLUMN ] column [ RESTRICT | CASCADE ]

ALTER TABLE [ ONLY ] table [ * ]

ALTER [ COLUMN ] column { SET DEFAULT value | DROP DEFAULT }

ALTER TABLE [ ONLY ] table [ * ]

ALTER [ COLUMN ] column { SET | DROP } NOT NULL

ALTER TABLE [ ONLY ] table [ * ]

ALTER [ COLUMN ] column SET STATISTICS integer

ALTER TABLE [ ONLY ] table [ * ]

ALTER [ COLUMN ] column SET STORAGE { PLAIN | EXTERNAL | EXTENDED | MAIN }

ALTER TABLE [ ONLY ] table [ * ]

RENAME [ COLUMN ] column TO new_column

ALTER TABLE table

RENAME TO new_table

ALTER TABLE [ ONLY ] table [ * ]

ADD table_constraint

ALTER TABLE [ ONLY ] table [ * ]

DROP CONSTRAINT constraint_name [ RESTRICT | CASCADE ]

ALTER TABLE table

OWNER TO new_owner

Inputs

table

The name (possibly schema-qualified) of an existing table to alter. If ONLY is specified, only that

table is altered. If ONLY is not specified, the table and all its descendant tables (if any) are updated.

* can be appended to the table name to indicate that descendant tables are to be scanned, but in

the current version, this is the default behavior. (In releases before 7.1, ONLY was the default

behavior.) The default can be altered by changing the SQL_INHERITANCE configuration option.

column

Name of a new or existing column.

type

Type of the new column.

new_column

New name for an existing column.

new_table

New name for the table.

1

ALTER TABLE

table_constraint

New table constraint for the table.

constraint_name

Name of an existing constraint to drop.

new_owner

The user name of the new owner of the table.

CASCADE

Automatically drop objects that depend on the dropped column or constraint (for example, views

referencing the column).

RESTRICT

Refuse to drop the column or constraint if there are any dependent objects. This is the default

behavior.

Outputs

ALTER TABLE

Message returned from column or table renaming.

ERROR

Message returned if table or column is not available.

Description

ALTER TABLE changes the definition of an existing table. There are several sub-forms:

ADD COLUMN

This form adds a new column to the table using the same syntax as CREATE TABLE.

DROP COLUMN

This form drops a column from a table. Note that indexes and table constraints involving the

column will be automatically dropped as well. You will need to say CASCADE if anything outside

the table depends on the column --- for example, foreign key references, views, etc.

SET/DROP DEFAULT

These forms set or remove the default value for a column. Note that defaults only apply to

subsequent INSERT commands; they do not cause rows already in the table to change. Defaults

may also be created for views, in which case they are inserted into INSERT statements on the

view before the view’s ON INSERT rule is applied.

2

ALTER TABLE

SET/DROP NOT NULL

These forms change whether a column is marked to allow NULL values or to reject NULL

values. You may only SET NOT NULL when the table contains no null values in the column.

SET STATISTICS

This form sets the per-column statistics-gathering target for subsequent ANALYZE operations.

The target can be set in the range 0 to 1000; alternatively, set it to -1 to revert to using the system

default statistics target.

SET STORAGE

This form sets the storage mode for a column. This controls whether this column is held inline

or in a supplementary table, and whether the data should be compressed or not. PLAIN must be

used for fixed-length values such as INTEGER and is inline, uncompressed. MAIN is for inline,

compressible data. EXTERNAL is for external, uncompressed data and EXTENDED is for external,

compressed data. EXTENDED is the default for all data types that support it. The use of EXTERNAL

will make substring operations on a TEXT column faster, at the penalty of increased storage

space.

RENAME

The RENAME forms change the name of a table (or an index, sequence, or view) or the name of

an individual column in a table. There is no effect on the stored data.

ADD table_constraint

This form adds a new constraint to a table using the same syntax as CREATE TABLE.

DROP CONSTRAINT

This form drops constraints on a table. Currently, constraints on tables are not required to have

unique names, so there may be more than one constraint matching the specified name. All such

constraints will be dropped.

OWNER

This form changes the owner of the table, index, sequence or view to the specified user.

You must own the table to use ALTER TABLE; except for ALTER TABLE OWNER, which may only be

executed by a superuser.

Notes

The keyword COLUMN is noise and can be omitted.

In the current implementation of ADD COLUMN, default and NOT NULL clauses for the new column

are not supported. The new column always comes into being with all values NULL. You can use the

SET DEFAULT form of ALTER TABLE to set the default afterwards. (You may also want to update

the already existing rows to the new default value, using UPDATE.) If you want to mark the column

non-null, use the SET NOT NULL form after you’ve entered non-null values for the column in all

rows.

The DROP COLUMN command does not physically remove the column, but simply makes it invisible

to SQL operations. Subsequent inserts and updates of the table will store a NULL for the column.

Thus, dropping a column is quick but it will not immediately reduce the on-disk size of your table, as

the space occupied by the dropped column is not reclaimed. The space will be reclaimed over time

as existing rows are updated. To reclaim the space at once, do a dummy UPDATE of all rows and then

vacuum, as in:

3

ALTER TABLE

UPDATE table SET col = col;

VACUUM FULL table;

If a table has any descendant tables, it is not permitted to ADD or RENAME a column in the parent

table without doing the same to the descendants --- that is, ALTER TABLE ONLY will be rejected.

This ensures that the descendants always have columns matching the parent.

A recursive DROP COLUMN operation will remove a descendant table’s column only if the descen-

dant does not inherit that column from any other parents and never had an independent definition

of the column. A nonrecursive DROP COLUMN (i.e., ALTER TABLE ONLY ... DROP COLUMN)

never removes any descendant columns, but instead marks them as independently defined rather than

inherited.

Changing any part of the schema of a system catalog is not permitted.

Refer to CREATE TABLE for a further description of valid arguments. The PostgreSQL User’s Guide

has further information on inheritance.

Usage

To add a column of type varchar to a table:

ALTER TABLE distributors ADD COLUMN address VARCHAR(30);

To drop a column from a table:

ALTER TABLE distributors DROP COLUMN address RESTRICT;

To rename an existing column:

ALTER TABLE distributors RENAME COLUMN address TO city;

To rename an existing table:

ALTER TABLE distributors RENAME TO suppliers;

To add a NOT NULL constraint to a column:

ALTER TABLE distributors ALTER COLUMN street SET NOT NULL;

To remove a NOT NULL constraint from a column:

ALTER TABLE distributors ALTER COLUMN street DROP NOT NULL;

4

ALTER TABLE

To add a check constraint to a table:

ALTER TABLE distributors ADD CONSTRAINT zipchk CHECK (char_length(zipcode) = 5);

To remove a check constraint from a table and all its children:

ALTER TABLE distributors DROP CONSTRAINT zipchk;

To add a foreign key constraint to a table:

ALTER TABLE distributors ADD CONSTRAINT distfk FOREIGN KEY (address) REF-

ERENCES addresses(address) MATCH FULL;

To add a (multicolumn) unique constraint to a table:

ALTER TABLE distributors ADD CONSTRAINT dist_id_zipcode_key UNIQUE (dist_id, zip-

code);

To add an automatically named primary key constraint to a table, noting that a table can only ever

have one primary key:

ALTER TABLE distributors ADD PRIMARY KEY (dist_id);

Compatibility

SQL92

The ADD COLUMN form is compliant with the exception that it does not support defaults and NOT

NULL constraints, as explained above. The ALTER COLUMN form is in full compliance.

The clauses to rename tables, columns, indexes, and sequences are PostgreSQL extensions from

SQL92.

5

ALTER TRIGGER

Name

ALTER TRIGGER — change the definition of a trigger

Synopsis

ALTER TRIGGER trigger ON table

RENAME TO newname

Inputs

trigger

The name of an existing trigger to alter.

table

The name of the table on which this trigger acts.

newname

New name for the existing trigger.

Outputs

ALTER TRIGGER

Message returned from trigger renaming.

ERROR

Message returned if trigger is not available, or new name is a duplicate of another existing trigger

on the table.

Description

ALTER TRIGGER changes the definition of an existing trigger. The RENAME clause causes the name

of a trigger on the given table to change without otherwise changing the trigger definition.

You must own the table on which the trigger acts in order to change its properties.

1

ALTER TRIGGER

Notes

Refer to CREATE TRIGGER for a further description of valid arguments.

Usage

To rename an existing trigger:

ALTER TRIGGER emp_stamp ON emp RENAME TO emp_track_chgs;

Compatibility

SQL92

The clause to rename triggers is a PostgreSQL extension from SQL92.

2

ALTER USER

Name

ALTER USER — change a database user account

Synopsis

ALTER USER username [ [ WITH ] option [ ... ] ]

where option can be:

[ ENCRYPTED | UNENCRYPTED ] PASSWORD ’password’

| CREATEDB | NOCREATEDB

| CREATEUSER | NOCREATEUSER

| VALID UNTIL ’abstime’

ALTER USER username SET variable { TO | = } { value | DEFAULT }

ALTER USER username RESET variable

Description

ALTER USER is used to change the attributes of a PostgreSQL user account. Attributes not mentioned

in the command retain their previous settings.

The first variant of this command in the synopsis changes certain global user privileges and authenti-

cation settings. (See below for details.) Only a database superuser can change privileges and password

expiration with this command. Ordinary users can only change their own password.

The second and the third variant change a user’s session default for a specified configuration variable.

Whenever the user subsequently starts a new session, the specified value becomes the session default,

overriding whatever setting is present in postgresql.conf or has been received from the postmas-

ter. Ordinary users can change their own session defaults. Superusers can change anyone’s session

defaults.

Parameters

username

The name of the user whose attributes are to be altered.

password

The new password to be used for this account.

ENCRYPTED

UNENCRYPTED

These key words control whether the password is stored encrypted in pg_shadow. (See CREATE

USER for more information about this choice.)

1

ALTER USER

CREATEDB

NOCREATEDB

These clauses define a user’s ability to create databases. If CREATEDB is specified, the user being

defined will be allowed to create his own databases. Using NOCREATEDB will deny a user the

ability to create databases.

CREATEUSER

NOCREATEUSER

These clauses determine whether a user will be permitted to create new users himself. This option

will also make the user a superuser who can override all access restrictions.

abstime

The date (and, optionally, the time) at which this user’s password is to expire.

variable

value

Set this user’s session default for the specified configuration variable to the given value. If value

is DEFAULT or, equivalently, RESET is used, the user-specific variable setting is removed and the

user will inherit the system-wide default setting in new sessions. Use RESET ALL to clear all

settings.

See SET and the Administrator’s Guide for more information about allowed variable names and

values.

Diagnostics

ALTER USER

Message returned if the alteration was successful.

ERROR: ALTER USER: user "username" does not exist

Error message returned if the specified user is not known to the database.

Notes

Use CREATE USER to add new users, and DROP USER to remove a user.

ALTER USER cannot change a user’s group memberships. Use ALTER GROUP to do that.

Using ALTER DATABASE, it is also possible to tie a session default to a specific database rather than

a user.

2

ALTER USER

Examples

Change a user password:

ALTER USER davide WITH PASSWORD ’hu8jmn3’;

Change a user’s valid until date:

ALTER USER manuel VALID UNTIL ’Jan 31 2030’;

Change a user’s valid until date, specifying that his authorization should expire at midday on 4th May

1998 using the time zone which is one hour ahead of UTC:

ALTER USER chris VALID UNTIL ’May 4 12:00:00 1998 +1’;

Give a user the ability to create other users and new databases:

ALTER USER miriam CREATEUSER CREATEDB;

Compatibility

The ALTER USER statement is a PostgreSQL extension. The SQL standard leaves the definition of

users to the implementation.

See Also

CREATE USER, DROP USER, SET

3

ANALYZE

Name

ANALYZE — collect statistics about a database

Synopsis

ANALYZE [ VERBOSE ] [ table [ (column [, ...] ) ] ]

Inputs

VERBOSE

Enables display of progress messages.

table

The name (possibly schema-qualified) of a specific table to analyze. Defaults to all tables in the

current database.

column

The name of a specific column to analyze. Defaults to all columns.

Outputs

ANALYZE

The command is complete.

Description

ANALYZE collects statistics about the contents of PostgreSQL tables, and stores the results in the

system table pg_statistic. Subsequently, the query planner uses the statistics to help determine

the most efficient execution plans for queries.

With no parameter, ANALYZE examines every table in the current database. With a parameter, ANA-

LYZE examines only that table. It is further possible to give a list of column names, in which case only

the statistics for those columns are updated.

1

ANALYZE

Notes

It is a good idea to run ANALYZE periodically, or just after making major changes in the contents of a

table. Accurate statistics will help the planner to choose the most appropriate query plan, and thereby

improve the speed of query processing. A common strategy is to run VACUUM and ANALYZE once a

day during a low-usage time of day.

Unlike VACUUM FULL, ANALYZE requires only a read lock on the target table, so it can run in parallel

with other activity on the table.

For large tables, ANALYZE takes a random sample of the table contents, rather than examining every

row. This allows even very large tables to be analyzed in a small amount of time. Note however that

the statistics are only approximate, and will change slightly each time ANALYZE is run, even if the

actual table contents did not change. This may result in small changes in the planner’s estimated costs

shown by EXPLAIN.

The collected statistics usually include a list of some of the most common values in each column and

a histogram showing the approximate data distribution in each column. One or both of these may be

omitted if ANALYZE deems them uninteresting (for example, in a unique-key column, there are no

common values) or if the column data type does not support the appropriate operators. There is more

information about the statistics in the User’s Guide.

The extent of analysis can be controlled by adjusting the default_statistics_target parameter

variable, or on a column-by-column basis by setting the per-column statistics target with ALTER TA-

BLE ALTER COLUMN SET STATISTICS (see ALTER TABLE). The target value sets the maximum

number of entries in the most-common-value list and the maximum number of bins in the histogram.

The default target value is 10, but this can be adjusted up or down to trade off accuracy of planner

estimates against the time taken for ANALYZE and the amount of space occupied in pg_statistic.

In particular, setting the statistics target to zero disables collection of statistics for that column. It may

be useful to do that for columns that are never used as part of the WHERE, GROUP BY, or ORDER

BY clauses of queries, since the planner will have no use for statistics on such columns.

The largest statistics target among the columns being analyzed determines the number of table rows

sampled to prepare the statistics. Increasing the target causes a proportional increase in the time and

space needed to do ANALYZE.

Compatibility

SQL92

There is no ANALYZE statement in SQL92.

2

BEGIN

Name

BEGIN — start a transaction block

Synopsis

BEGIN [ WORK | TRANSACTION ]

Inputs

WORK

TRANSACTION

Optional keywords. They have no effect.

Outputs

BEGIN

This signifies that a new transaction has been started.

WARNING: BEGIN: already a transaction in progress

This indicates that a transaction was already in progress. The current transaction is not affected.

Description

By default, PostgreSQL executes transactions in unchained mode (also known as “autocommit” in

other database systems). In other words, each user statement is executed in its own transaction and

a commit is implicitly performed at the end of the statement (if execution was successful, otherwise

a rollback is done). BEGIN initiates a user transaction in chained mode, i.e., all user statements after

BEGIN command will be executed in a single transaction until an explicit COMMIT or ROLLBACK.

Statements are executed more quickly in chained mode, because transaction start/commit requires

significant CPU and disk activity. Execution of multiple statements inside a transaction is also useful

to ensure consistency when changing several related tables: other clients will be unable to see the

intermediate states wherein not all the related updates have been done.

The default transaction isolation level in PostgreSQL is READ COMMITTED, wherein each query

inside the transaction sees changes committed before that query begins execution. So, you have to

use SET TRANSACTION ISOLATION LEVEL SERIALIZABLE just after BEGIN if you need more

rigorous transaction isolation. (Alternatively, you can change the default transaction isolation level;

see the PostgreSQL Administrator’s Guide for details.) In SERIALIZABLE mode queries will see

1

BEGIN

only changes committed before the entire transaction began (actually, before execution of the first

DML statement in the transaction).

Transactions have the standard ACID (atomic, consistent, isolatable, and durable) properties.

Notes

START TRANSACTION has the same functionality as BEGIN.

Use COMMIT or ROLLBACK to terminate a transaction.

Refer to LOCK for further information about locking tables inside a transaction.

If you turn autocommit mode off, then BEGIN is not required: any SQL command automatically

starts a transaction.

Usage

To begin a user transaction:

BEGIN WORK;

Compatibility

SQL92

BEGIN is a PostgreSQL language extension. There is no explicit BEGIN command in SQL92; trans-

action initiation is always implicit and it terminates either with a COMMIT or ROLLBACK statement.

Note: Many relational database systems offer an autocommit feature as a convenience.

Incidentally, the BEGIN keyword is used for a different purpose in embedded SQL. You are advised

to be careful about the transaction semantics when porting database applications.

SQL92 also requires SERIALIZABLE to be the default transaction isolation level.

2

CHECKPOINT

Name

CHECKPOINT — force a transaction log checkpoint

Synopsis

CHECKPOINT

Description

Write-Ahead Logging (WAL) puts a checkpoint in the transaction log every so often. (To adjust the

automatic checkpoint interval, see the run-time configuration options CHECKPOINT_SEGMENTS

and CHECKPOINT_TIMEOUT.) The CHECKPOINT command forces an immediate checkpoint when

the command is issued, without waiting for a scheduled checkpoint.

A checkpoint is a point in the transaction log sequence at which all data files have been updated

to reflect the information in the log. All data files will be flushed to disk. Refer to the PostgreSQL

Administrator’s Guide for more information about the WAL system.

Only superusers may call CHECKPOINT. The command is not intended for use during normal opera-

tion.

See Also

PostgreSQL Administrator’s Guide

Compatibility

The CHECKPOINT command is a PostgreSQL language extension.

1

CLOSE

Name

CLOSE — close a cursor

Synopsis

CLOSE cursor

Inputs

cursor

The name of an open cursor to close.

Outputs

CLOSE CURSOR

Message returned if the cursor is successfully closed.

WARNING: PerformPortalClose: portal "cursor" not found

This warning is given if cursor is not declared or has already been closed.

Description

CLOSE frees the resources associated with an open cursor. After the cursor is closed, no subsequent

operations are allowed on it. A cursor should be closed when it is no longer needed.

An implicit close is executed for every open cursor when a transaction is terminated by COMMIT or

ROLLBACK.

Notes

PostgreSQL does not have an explicit OPEN cursor statement; a cursor is considered open when it is

declared. Use the DECLARE statement to declare a cursor.

1

CLOSE

Usage

Close the cursor liahona:

CLOSE liahona;

Compatibility

SQL92

CLOSE is fully compatible with SQL92.

2

CLUSTER

Name

CLUSTER — cluster a table according to an index

Synopsis

CLUSTER indexname ON tablename

Inputs

indexname

The name of an index.

table

The name (possibly schema-qualified) of a table.

Outputs

CLUSTER

The clustering was done successfully.

Description

CLUSTER instructs PostgreSQL to cluster the table specified by table based on the index specified

by indexname. The index must already have been defined on tablename.

When a table is clustered, it is physically reordered based on the index information. Clustering is a

one-time operation: when the table is subsequently updated, the changes are not clustered. That is, no

attempt is made to store new or updated tuples according to their index order. If one wishes, one can

periodically re-cluster by issuing the command again.

Notes

In cases where you are accessing single rows randomly within a table, the actual order of the data in

the heap table is unimportant. However, if you tend to access some data more than others, and there

is an index that groups them together, you will benefit from using CLUSTER.

Another place where CLUSTER is helpful is in cases where you use an index to pull out several rows

from a table. If you are requesting a range of indexed values from a table, or a single indexed value

that has multiple rows that match, CLUSTER will help because once the index identifies the heap page

1

CLUSTER

for the first row that matches, all other rows that match are probably already on the same heap page,

saving disk accesses and speeding up the query.

During the cluster operation, a temporary copy of the table is created that contains the table data in

the index order. Temporary copies of each index on the table are created as well. Therefore, you need

free space on disk at least equal to the sum of the table size and the index sizes.

CLUSTER preserves GRANT, inheritance, index, foreign key, and other ancillary information about

the table.

Because the optimizer records statistics about the ordering of tables, it is advisable to run ANALYZE

on the newly clustered table. Otherwise, the optimizer may make poor choices of query plans.

There is another way to cluster data. The CLUSTER command reorders the original table using the

ordering of the index you specify. This can be slow on large tables because the rows are fetched from

the heap in index order, and if the heap table is unordered, the entries are on random pages, so there

is one disk page retrieved for every row moved. (PostgreSQL has a cache, but the majority of a big

table will not fit in the cache.) The other way to cluster a table is to use

SELECT columnlist INTO TABLE newtable

FROM table ORDER BY columnlist

which uses the PostgreSQL sorting code in the ORDER BY clause to create the desired order; this is

usually much faster than an index scan for unordered data. You then drop the old table, use ALTER

TABLE...RENAME to rename newtable to the old name, and recreate the table’s indexes. However,

this approach does not preserve OIDs, constraints, foreign key relationships, granted privileges, and

other ancillary properties of the table --- all such items must be manually recreated.

Usage

Cluster the employees relation on the basis of its ID attribute:

CLUSTER emp_ind ON emp;

Compatibility

SQL92

There is no CLUSTER statement in SQL92.

2

COMMENT

Name

COMMENT — define or change the comment of an object

Synopsis

COMMENT ON

[

TABLE object_name |

COLUMN table_name.column_name |

AGGREGATE agg_name (agg_type) |

CONSTRAINT constraint_name ON table_name |

DATABASE object_name |

DOMAIN object_name |

FUNCTION func_name (arg1_type, arg2_type, ...) |

INDEX object_name |

OPERATOR op (leftoperand_type, rightoperand_type) |

RULE rule_name ON table_name |

SCHEMA object_name |

SEQUENCE object_name |

TRIGGER trigger_name ON table_name |

TYPE object_name |

VIEW object_name

] IS ’text’

Inputs

object_name, table_name.column_name, agg_name, constraint_name,

func_name, op, rule_name, trigger_name

The name of the object to be be commented. Names of tables, aggregates, domains, functions,

indexes, operators, sequences, types, and views may be schema-qualified.

text

The comment to add.

Outputs

COMMENT

Message returned if the table is successfully commented.

1

COMMENT

Description

COMMENT stores a comment about a database object. Comments can be easily retrieved with psql’s

\dd, \d+, or \l+ commands. Other user interfaces to retrieve comments can be built atop the same

built-in functions that psql uses, namely obj_description() and col_description().

To modify a comment, issue a new COMMENT command for the same object. Only one comment string

is stored for each object. To remove a comment, write NULL in place of the text string. Comments are

automatically dropped when the object is dropped.

Note: There is presently no security mechanism for comments: any user connected to a database

can see all the comments for objects in that database (although only superusers can change

comments for objects that they don’t own). Therefore, don’t put security-critical information in

comments.

Usage

Attach a comment to the table mytable:

COMMENT ON TABLE mytable IS ’This is my table.’;

Remove it again:

COMMENT ON TABLE mytable IS NULL;

Some more examples:

COMMENT ON AGGREGATE my_aggregate (double precision) IS ’Computes sample vari-

ance’;

COMMENT ON COLUMN my_table.my_field IS ’Employee ID number’;

COMMENT ON DATABASE my_database IS ’Development Database’;

COMMENT ON DOMAIN my_domain IS ’Email Address Domain’;

COMMENT ON FUNCTION my_function (timestamp) IS ’Returns Roman Numeral’;

COMMENT ON INDEX my_index IS ’Enforces uniqueness on employee id’;

COMMENT ON OPERATOR ^ (text, text) IS ’Performs intersection of two texts’;

COMMENT ON OPERATOR ^ (NONE, text) IS ’This is a prefix operator on text’;

COMMENT ON RULE my_rule ON my_table IS ’Logs UPDATES of employee records’;

COMMENT ON SCHEMA my_schema IS ’Departmental data’;

COMMENT ON SEQUENCE my_sequence IS ’Used to generate primary keys’;

COMMENT ON TABLE my_schema.my_table IS ’Employee Information’;

COMMENT ON TRIGGER my_trigger ON my_table IS ’Used for R.I.’;

COMMENT ON TYPE complex IS ’Complex Number datatype’;

COMMENT ON VIEW my_view IS ’View of departmental costs’;

2

COMMENT

Compatibility

SQL92

There is no COMMENT in SQL92.

3

COMMIT

Name

COMMIT — commit the current transaction

Synopsis

COMMIT [ WORK | TRANSACTION ]

Inputs

WORK

TRANSACTION

Optional keywords. They have no effect.

Outputs

COMMIT

Message returned if the transaction is successfully committed.

WARNING: COMMIT: no transaction in progress

If there is no transaction in progress.

Description

COMMIT commits the current transaction. All changes made by the transaction become visible to

others and are guaranteed to be durable if a crash occurs.

Notes

The keywords WORK and TRANSACTION are noise and can be omitted.

Use ROLLBACK to abort a transaction.

Usage

To make all changes permanent:

COMMIT WORK;

1

COMMIT

Compatibility

SQL92

SQL92 only specifies the two forms COMMIT and COMMIT WORK. Otherwise full compatibility.

2

COPY

Name

COPY — copy data between files and tables

Synopsis

COPY table [ ( column [, ...] ) ]

FROM { ’filename’ | stdin }

[ [ WITH ]

[ BINARY ]

[ OIDS ]

[ DELIMITER [ AS ] ’delimiter’ ]

[ NULL [ AS ] ’null string’ ] ]

COPY table [ ( column [, ...] ) ]

TO { ’filename’ | stdout }

[ [ WITH ]

[ BINARY ]

[ OIDS ]

[ DELIMITER [ AS ] ’delimiter’ ]

[ NULL [ AS ] ’null string’ ] ]

Inputs

table

The name (possibly schema-qualified) of an existing table.

column

An optional list of columns to be copied. If no column list is specified, all columns will be used.

filename

The absolute Unix path name of the input or output file.

stdin

Specifies that input comes from the client application.

stdout

Specifies that output goes to the client application.

BINARY

Changes the behavior of field formatting, forcing all data to be stored or read in binary format

rather than as text. You can not specify DELIMITER or NULL in binary mode.

OIDS

Specifies copying the internal object id (OID) for each row.

delimiter

The single character that separates fields within each row (line) of the file.

1

COPY

null string

The string that represents a NULL value. The default is “\N” (backslash-N). You might prefer

an empty string, for example.

Note: On a copy in, any data item that matches this string will be stored as a NULL value,

so you should make sure that you use the same string as you used on copy out.

Outputs

COPY

The copy completed successfully.

ERROR: reason

The copy failed for the reason stated in the error message.

Description

COPY moves data between PostgreSQL tables and standard file-system files. COPY TO copies the

contents of a table to a file, while COPY FROM copies data from a file to a table (appending the data to

whatever is in the table already).

If a list of columns is specified, COPY will only copy the data in the specified columns to or from the

file. If there are any columns in the table that are not in the column list, COPY FROM will insert the

default values for those columns.

COPY with a file name instructs the PostgreSQL backend to directly read from or write to a file. The

file must be accessible to the backend and the name must be specified from the viewpoint of the

backend. When stdin or stdout is specified, data flows through the client frontend to the backend.

Tip: Do not confuse COPY with the psql instruction \copy. \copy invokes COPY FROM stdin or

COPY TO stdout, and then fetches/stores the data in a file accessible to the psql client. Thus, file

accessibility and access rights depend on the client rather than the backend when \copy is used.

Notes

COPY can only be used with plain tables, not with views.

The BINARY keyword will force all data to be stored/read as binary format rather than as text. It is

somewhat faster than the normal copy command, but a binary copy file is not portable across machine

architectures.

2

COPY

By default, a text copy uses a tab ("\t") character as a delimiter between fields. The field delimiter

may be changed to any other single character with the keyword DELIMITER. Characters in data fields

that happen to match the delimiter character will be backslash quoted.

You must have select privilege on any table whose values are read by COPY TO, and insert privilege

on a table into which values are being inserted by COPY FROM. The backend also needs appropriate

Unix permissions for any file read or written by COPY.

COPY FROM will invoke any triggers and check constraints on the destination table. However, it will

not invoke rules.

COPY stops operation at the first error. This should not lead to problems in the event of a COPY TO,

but the target relation will already have received earlier rows in a COPY FROM. These rows will not

be visible or accessible, but they still occupy disk space. This may amount to a considerable amount

of wasted disk space if the failure happened well into a large copy operation. You may wish to invoke

VACUUM to recover the wasted space.

Files named in a COPY command are read or written directly by the backend, not by the client appli-

cation. Therefore, they must reside on or be accessible to the database server machine, not the client.

They must be accessible to and readable or writable by the PostgreSQL user (the user ID the server

runs as), not the client. COPY naming a file is only allowed to database superusers, since it allows

reading or writing any file that the backend has privileges to access.

Tip: The psql instruction \copy reads or writes files on the client machine with the client’s per-

missions, so it is not restricted to superusers.

It is recommended that the file name used in COPY always be specified as an absolute path. This

is enforced by the backend in the case of COPY TO, but for COPY FROM you do have the option of

reading from a file specified by a relative path. The path will be interpreted relative to the backend’s

working directory (somewhere below $PGDATA), not the client’s working directory.

File Formats

Text Format

When COPY is used without the BINARY option, the file read or written is a text file with one line per

table row. Columns (attributes) in a row are separated by the delimiter character. The attribute values

themselves are strings generated by the output function, or acceptable to the input function, of each

attribute’s data type. The specified null-value string is used in place of attributes that are NULL. COPY

FROM will raise an error if any line of the input file contains more or fewer columns than are expected.

If OIDS is specified, the OID is read or written as the first column, preceding the user data columns.

(An error is raised if OIDS is specified for a table that does not have OIDs.)

End of data can be represented by a single line containing just backslash-period (\.). An end-of-data

marker is not necessary when reading from a Unix file, since the end of file serves perfectly well; but

an end marker must be provided when copying data to or from a client application.

Backslash characters (\) may be used in the COPY data to quote data characters that might otherwise

be taken as row or column delimiters. In particular, the following characters must be preceded by

a backslash if they appear as part of an attribute value: backslash itself, newline, and the current

delimiter character.

3

COPY

The following special backslash sequences are recognized by COPY FROM:

Sequence Represents

\b Backspace (ASCII 8)

\f Form feed (ASCII 12)

\n Newline (ASCII 10)

\r Carriage return (ASCII 13)

\t Tab (ASCII 9)

\v Vertical tab (ASCII 11)

\digits Backslash followed by one to three octal digits

specifies the character with that numeric code

Presently, COPY TO will never emit an octal-digits backslash sequence, but it does use the other se-

quences listed above for those control characters.

Never put a backslash before a data character N or period (.). Such pairs will be mistaken for the

default null string or the end-of-data marker, respectively. Any other backslashed character that is not

mentioned in the above table will be taken to represent itself.

It is strongly recommended that applications generating COPY data convert data newlines and car-

riage returns to the \n and \r sequences respectively. At present (PostgreSQL 7.2 and older versions)

it is possible to represent a data carriage return without any special quoting, and to represent a data

newline by a backslash and newline. However, these representations will not be accepted by default

in future releases.

Note that the end of each row is marked by a Unix-style newline ("\n"). Presently, COPY FROM will not

behave as desired if given a file containing DOS- or Mac-style newlines. This is expected to change

in future releases.

Binary Format

The file format used for COPY BINARY changed in PostgreSQL v7.1. The new format consists of a

file header, zero or more tuples, and a file trailer.

File Header

The file header consists of 24 bytes of fixed fields, followed by a variable-length header extension

area. The fixed fields are:

Signature

12-byte sequence PGBCOPY\n\377\r\n\0 --- note that the null is a required part of the signa-

ture. (The signature is designed to allow easy identification of files that have been munged by a

non-8-bit-clean transfer. This signature will be changed by newline-translation filters, dropped

nulls, dropped high bits, or parity changes.)

Integer layout field

int32 constant 0x01020304 in source’s byte order. Potentially, a reader could engage in byte-

flipping of subsequent fields if the wrong byte order is detected here.

Flags field

int32 bit mask to denote important aspects of the file format. Bits are numbered from 0 (LSB) to

31 (MSB) --- note that this field is stored with source’s endianness, as are all subsequent integer

4

COPY

fields. Bits 16-31 are reserved to denote critical file format issues; a reader should abort if it finds

an unexpected bit set in this range. Bits 0-15 are reserved to signal backwards-compatible format

issues; a reader should simply ignore any unexpected bits set in this range. Currently only one

flag bit is defined, and the rest must be zero:

Bit 16

if 1, OIDs are included in the dump; if 0, not

Header extension area length

int32 length in bytes of remainder of header, not including self. In the initial version this will be

zero, and the first tuple follows immediately. Future changes to the format might allow additional

data to be present in the header. A reader should silently skip over any header extension data it

does not know what to do with.

The header extension area is envisioned to contain a sequence of self-identifying chunks. The flags

field is not intended to tell readers what is in the extension area. Specific design of header extension

contents is left for a later release.

This design allows for both backwards-compatible header additions (add header extension chunks, or

set low-order flag bits) and non-backwards-compatible changes (set high-order flag bits to signal such

changes, and add supporting data to the extension area if needed).

Tuples

Each tuple begins with an int16 count of the number of fields in the tuple. (Presently, all tuples in a

table will have the same count, but that might not always be true.) Then, repeated for each field in the

tuple, there is an int16 typlen word possibly followed by field data. The typlen field is interpreted

thus:

Zero

Field is NULL. No data follows.

> 0

Field is a fixed-length data type. Exactly N bytes of data follow the typlen word.

-1

Field is a varlena data type. The next four bytes are the varlena header, which contains the

total value length including itself.

< -1

Reserved for future use.

For non-NULL fields, the reader can check that the typlen matches the expected typlen for the

destination column. This provides a simple but very useful check that the data is as expected.

5

COPY

There is no alignment padding or any other extra data between fields. Note also that the format does

not distinguish whether a data type is pass-by-reference or pass-by-value. Both of these provisions

are deliberate: they might help improve portability of the files (although of course endianness and

floating-point-format issues can still keep you from moving a binary file across machines).

If OIDs are included in the dump, the OID field immediately follows the field-count word. It is a

normal field except that it’s not included in the field-count. In particular it has a typlen --- this will

allow handling of 4-byte vs 8-byte OIDs without too much pain, and will allow OIDs to be shown as

NULL if that ever proves desirable.

File Trailer

The file trailer consists of an int16 word containing -1. This is easily distinguished from a tuple’s

field-count word.

A reader should report an error if a field-count word is neither -1 nor the expected number of columns.

This provides an extra check against somehow getting out of sync with the data.

Usage

The following example copies a table to standard output, using a vertical bar (|) as the field delimiter:

COPY country TO stdout WITH DELIMITER ’|’;

To copy data from a Unix file into the country table:

COPY country FROM ’/usr1/proj/bray/sql/country_data’;

Here is a sample of data suitable for copying into a table from stdin (so it has the termination

sequence on the last line):

AF AFGHANISTAN

AL ALBANIA

DZ ALGERIA

ZM ZAMBIA

ZW ZIMBABWE

\.

Note that the white space on each line is actually a TAB.

The following is the same data, output in binary format on a Linux/i586 machine. The data is shown

after filtering through the Unix utility od -c. The table has three fields; the first is char(2), the

second is text, and the third is integer. All the rows have a null value in the third field.

0000000 P G B C O P Y \n 377 \r \n \0 004 003 002 001

0000020 \0 \0 \0 \0 \0 \0 \0 \0 003 \0 377 377 006 \0 \0 \0

0000040 A F 377 377 017 \0 \0 \0 A F G H A N I S

0000060 T A N \0 \0 003 \0 377 377 006 \0 \0 \0 A L 377

0000100 377 \v \0 \0 \0 A L B A N I A \0 \0 003 \0

0000120 377 377 006 \0 \0 \0 D Z 377 377 \v \0 \0 \0 A L

0000140 G E R I A \0 \0 003 \0 377 377 006 \0 \0 \0 Z

6

COPY

0000160 M 377 377 \n \0 \0 \0 Z A M B I A \0 \0 003

0000200 \0 377 377 006 \0 \0 \0 Z W 377 377 \f \0 \0 \0 Z

0000220 I M B A B W E \0 \0 377 377

Compatibility

SQL92

There is no COPY statement in SQL92.

The following syntax was used by pre-7.3 applications and is still supported:

COPY [ BINARY ] table [ WITH OIDS ]

FROM { ’filename’ | stdin }

[ [USING] DELIMITERS ’delimiter’ ]

[ WITH NULL AS ’null string’ ]

COPY [ BINARY ] table [ WITH OIDS ]

TO { ’filename’ | stdout }

[ [USING] DELIMITERS ’delimiter’ ]

[ WITH NULL AS ’null string’ ]

7

CREATE AGGREGATE

Name

CREATE AGGREGATE — define a new aggregate function

Synopsis

CREATE AGGREGATE name ( BASETYPE = input_data_type,

SFUNC = sfunc, STYPE = state_type

[ , FINALFUNC = ffunc ]

[ , INITCOND = initial_condition ] )

Inputs

name

The name (optionally schema-qualified) of an aggregate function to create.

input_data_type

The input data type on which this aggregate function operates. This can be specified as "ANY"

for an aggregate that does not examine its input values (an example is count(*)).

sfunc

The name of the state transition function to be called for each input data value. This is normally

a function of two arguments, the first being of type state_type and the second of type in-

put_data_type. Alternatively, for an aggregate that does not examine its input values, the

function takes just one argument of type state_type. In either case the function must return

a value of type state_type. This function takes the current state value and the current input

data item, and returns the next state value.

state_type

The data type for the aggregate’s state value.

ffunc

The name of the final function called to compute the aggregate’s result after all input data has

been traversed. The function must take a single argument of type state_type. The output data

type of the aggregate is defined as the return type of this function. If ffunc is not specified, then

the ending state value is used as the aggregate’s result, and the output type is state_type.

initial_condition

The initial setting for the state value. This must be a literal constant in the form accepted for the

data type state_type. If not specified, the state value starts out NULL.

1

CREATE AGGREGATE

Outputs

CREATE AGGREGATE

Message returned if the command completes successfully.

Description

CREATE AGGREGATE allows a user or programmer to extend PostgreSQL functionality by defin-

ing new aggregate functions. Some aggregate functions for base types such as min(integer) and

avg(double precision) are already provided in the base distribution. If one defines new types or

needs an aggregate function not already provided, then CREATE AGGREGATE can be used to provide

the desired features.

If a schema name is given (for example, CREATE AGGREGATE myschema.myagg ...) then the

aggregate function is created in the specified schema. Otherwise it is created in the current schema

(the one at the front of the search path; see CURRENT_SCHEMA()).

An aggregate function is identified by its name and input data type. Two aggregates in the same

schema can have the same name if they operate on different input types. The name and input data

type of an aggregate must also be distinct from the name and input data type(s) of every ordinary

function in the same schema.

An aggregate function is made from one or two ordinary functions: a state transition function sfunc,

and an optional final calculation function ffunc. These are used as follows:

sfunc( internal-state, next-data-item ) ---> next-internal-state

ffunc( internal-state ) ---> aggregate-value

PostgreSQL creates a temporary variable of data type stype to hold the current internal state of the

aggregate. At each input data item, the state transition function is invoked to calculate a new internal

state value. After all the data has been processed, the final function is invoked once to calculate the

aggregate’s output value. If there is no final function then the ending state value is returned as-is.

An aggregate function may provide an initial condition, that is, an initial value for the internal state

value. This is specified and stored in the database as a field of type text, but it must be a valid external

representation of a constant of the state value data type. If it is not supplied then the state value starts

out NULL.

If the state transition function is declared “strict”, then it cannot be called with NULL inputs. With

such a transition function, aggregate execution behaves as follows. NULL input values are ignored

(the function is not called and the previous state value is retained). If the initial state value is NULL,

then the first non-NULL input value replaces the state value, and the transition function is invoked be-

ginning with the second non-NULL input value. This is handy for implementing aggregates like max.

Note that this behavior is only available when state_type is the same as input_data_type.

When these types are different, you must supply a non-NULL initial condition or use a non-strict

transition function.

2

CREATE AGGREGATE

If the state transition function is not strict, then it will be called unconditionally at each input value,

and must deal with NULL inputs and NULL transition values for itself. This allows the aggregate

author to have full control over the aggregate’s handling of null values.

If the final function is declared “strict”, then it will not be called when the ending state value is NULL;

instead a NULL result will be output automatically. (Of course this is just the normal behavior of strict

functions.) In any case the final function has the option of returning NULL. For example, the final

function for avg returns NULL when it sees there were zero input tuples.

Notes

Use DROP AGGREGATE to drop aggregate functions.

The parameters of CREATE AGGREGATE can be written in any order, not just the order illustrated

above.

Usage

Refer to the chapter on aggregate functions in the PostgreSQL Programmer’s Guide for complete

examples of usage.

Compatibility

SQL92

CREATE AGGREGATE is a PostgreSQL language extension. There is no CREATE AGGREGATE in

SQL92.

3

CREATE CAST

Name

CREATE CAST — define a user-defined cast

Synopsis

CREATE CAST (sourcetype AS targettype)

WITH FUNCTION funcname (argtype)

[ AS ASSIGNMENT | AS IMPLICIT ]

CREATE CAST (sourcetype AS targettype)

WITHOUT FUNCTION

[ AS ASSIGNMENT | AS IMPLICIT ]

Description

CREATE CAST defines a new cast. A cast specifies how to perform a conversion between two data

types. For example,

SELECT CAST(42 AS text);

converts the integer constant 42 to type text by invoking a previously specified function, in this case

text(int4). (If no suitable cast has been defined, the conversion fails.)

Two types may be binary compatible, which means that they can be converted into one another “for

free” without invoking any function. This requires that corresponding values use the same internal

representation. For instance, the types text and varchar are binary compatible.

By default, a cast can be invoked only by an explicit cast request, that is an explicit CAST(x AS

typename), x::typename, or typename(x) construct.

If the cast is marked AS ASSIGNMENT then it can be invoked implicitly when assigning to a column

of the target data type. For example, supposing that foo.f1 is a column of type text, then

INSERT INTO foo(f1) VALUES(42);

will be allowed if the cast from type integer to type text is marked AS ASSIGNMENT, otherwise

not. (We generally use the term assignment cast to describe this kind of cast.)

If the cast is marked AS IMPLICIT then it can be invoked implicitly in any context, whether assign-

ment or internally in an expression. For example, since || takes text arguments,

SELECT ’The time is ’ || now();

will be allowed only if the cast from type timestamp to text is marked AS IMPLICIT. Otherwise

it will be necessary to write the cast explicitly, for example

SELECT ’The time is ’ || CAST(now() AS text);

(We generally use the term implicit cast to describe this kind of cast.)

It is wise to be conservative about marking casts as implicit. An overabundance of implicit casting

paths can cause PostgreSQL to choose surprising interpretations of commands, or to be unable to

resolve commands at all because there are multiple possible interpretations. A good rule of thumb is

1

CREATE CAST

to make a cast implicitly invokable only for information-preserving transformations between types in

the same general type category. For example, the cast from int2 to int4 can reasonably be implicit,

but the cast from float8 to int4 should probably be assignment-only. Cross-type-category casts,

such as text to int4, are best made explicit-only.

To be able to create a cast, you must own the source or the target data type. To create a

binary-compatible cast, you must be superuser (this restriction is made because an erroneous

binary-compatible cast conversion can easily crash the server).

Parameters

sourcetype

The name of the source data type of the cast.

targettype

The name of the target data type of the cast.

funcname(argtype)

The function used to perform the cast. The function name may be schema-qualified. If it is not,

the function will be looked up in the path. The argument type must be identical to the source

type, the result data type must match the target type of the cast.

WITHOUT FUNCTION

Indicates that the source type and the target type are binary compatible, so no function is required

to perform the cast.

AS ASSIGNMENT

Indicates that the cast may be invoked implicitly in assignment contexts.

AS IMPLICIT

Indicates that the cast may be invoked implicitly in any context.

Notes

Use DROP CAST to remove user-defined casts.

Remember that if you want to be able to convert types both ways you need to declare casts both ways

explicitly.

Prior to PostgreSQL 7.3, every function that had the same name as a data type, returned that data type,

and took one argument of a different type was automatically a cast function. This convention has been

abandoned in face of the introduction of schemas and to be able to represent binary compatible casts